PHR Conic Augmented Lagrangian Method

Slide: Starting from the penalty method, we extend to the augmented Lagrangian method for improved stability. By introducing

Introduction

Penalty Method

Consider the constrained optimization problem:

Penalty method solves a series of unconstrained problems:

Challenge:

- Requires

for exact solution, causing ill-conditioned Hessian. - Finite

leads to constraint violation .

Lagrangian Relaxation

The Lagrangian is defined as:

At the optimal solution

Uzawa's method iteratively updates

where

However, when

can be non-smooth even for smooth and . may be unbounded or have no finite solution.

Equality Constraint

PHR Augmented Lagrangian Method

Consider the optimization problem with a penalty on the deviation from a prior

The inner problem:

The outer problem:

PHR Augmented Lagrangian Method (cont.)

To increase precision:

- Reduce the penalty weight

- Update the prior multiplier

Uzawa's method for the augmented Lagrangian function is:

Penalty Method Perspective

The corresponding primal problem of the augumented Lagrangian Function is obviously:

Advantages:

- Even without

, the constraints can be exactly satisfied in the limit through multiplier updates. - For large

, the penalty term dominates, ensuring has a local solution. - The augmented dual function

is smooth in proper conditions, with .

Practical PHR-ALM

In practical, we use its equivalent form:

The KKT solution can be solved via:

where

Inequality Constraint

Slack Variables Relaxation

Consider the optimization problem with inequality constraints:

We use the equivalent formulation using slack variables:

where

We can directly form Lagrangian like equality-constrained case

Simplified Form

Summing over all components gives the final form:

For the dual update, from the optimality condition:

Summary

PHR Augmented Lagrangian Method for General Nonconvex cases:

Its PHR Augmented Lagrangian is defined as

The PHR-ALM is simply repeating the primal descent and dual ascent iterations:

Courtesy: Z. Wang

Symmetric Cone Constraint

Extension to Symmetric Cone Constraints

Consider the symmetric cone constrained optimization problem:

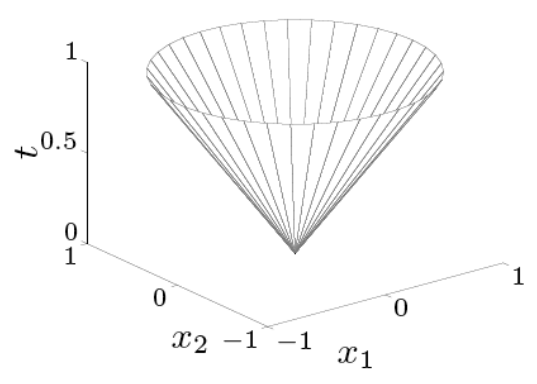

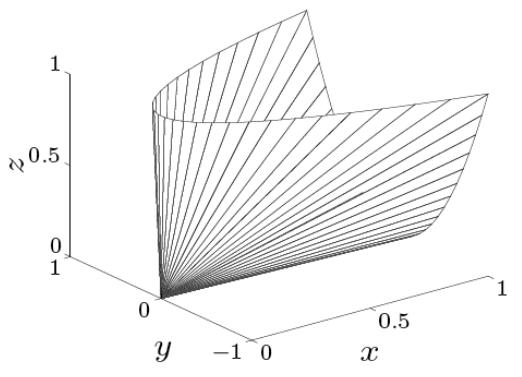

| Second Order Cone | Positive Definite Cone |

|---|---|

|  |

Generalized Inequality Constraint

For the symmetric cone constraint

The standard inequality constraint

t's projection operator is exactly element-wise max function:

Slack Variables Relaxation

Consider the optimization problem with inequality constraints:

By Euclidean Jordan algebra, the conit program is equivalent to

We can directly form Lagrangian like equality-constrained case

Simplified Form

Let

For the dual update, from the optimality condition:

where

Summary

PHR Augmented Lagrangian Method for General Nonconvex cases:

Its PHR Augmented Lagrangian is defined as

The PHR-ALM is simply repeating the primal descent and dual ascent iterations: